By now, most of us have noticed the overwhelming trend toward ditching performance rating scales and instead centering performance appraisals and performance management on quality conversations and continuous feedback.

Adobe, Deloitte, Microsoft, Accenture and even GE are all organizations that have committed to no longer using a single number to define performance.

This makes complete sense, in theory. But what we are seeing on the ground is that, in practice, it isn't working for many organizations, at least not when they simply get rid of ratings. We need to look closely at the fine print of these "no rating" processes. For many of the high-profile organizations that threw their ratings in the garbage, the switch took place alongside a series of new programs and procedures to engage their workforce, develop performance and determine compensation.

An organization that simply erases ratings and replaces them with comment boxes shouldn't be surprised to discover that little actually improves.

Getting back to it – are we throwing out the baby with the bathwater? Is there such a thing as an ideal rating system that allows for fair and accurate performance measurement, does not negatively impact employee engagement but still provides the metrics organizations need to monitor performance and plan for the future? Our answer is: “Yes, but.”

YES: It is possible to optimize how ratings are defined and used so as to minimize any negative impact on engagement and performance, while still allowing organizations to monitor what is happening.

BUT: For ratings to have a positive impact on employees and the organization, both the ratings and the way they are delivered need to be part of a re-tooled performance management process.

Let’s start by analyzing the good and the bad sides of ratings and their effect on performance.

Ratings: The bad

There is no question that we have all had a love-hate relationship with ratings over the years. They can be subjective, and poorly designed rating scales can be downright confusing. In our experience, people are also biased when handing out ratings and tend to be more nice than accurate.

So what do the numbers say? What is the actual impact on businesses that use ratings?

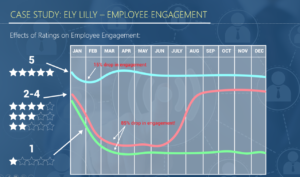

Let’s pause for a case study. In a webinar last year, talent management consultant Edie Goldberg presented research compiled by Eli Lilly as part of its business case for eliminating ratings and moving to a continuous performance management process.

Based on monthly pulse surveys, the company noticed that immediately following the annual performance rating process, engagement dropped significantly for 80% of the employee population, and it stayed low for months. Drilling into the data revealed that employees rated 4 or lower (on a standard 5-point scale) had the biggest drop in engagement.

More interesting still, even employees with a “5” rating (the top rating) experienced a dip in engagement, though they recovered quickly. Overall, the rating process was dragging down engagement and performance for more than half the year.

Because the annual review was the only formal feedback employees received, Eli Lilly concluded that the drop in engagement after being rated had as much to do with employees being surprised by the feedback as with the rating itself.

Ratings: The good

There is a rainbow after the storm: for all of their faults, ratings can deliver some value.

Ratings offer a quantifiable view of performance. This means an organization can report on its talent and use trends to plan improvements. Which managers are most effective? Which employees are most likely to become leaders? Are there severe skill gaps we need to address?

Some argue that ratings are inaccurate and the resulting data cannot be trusted. Overall, though, many organizations refuse to give up visibility into their largest investment and biggest determinant of success: their talent. Instead, companies are choosing to improve the accuracy and validity of their ratings. Besides providing data and metrics, ratings indicate relative performance, which makes it easier for managers to focus development discussions and link pay decisions to results.

Ratings: The reality

Performance ratings in their many forms are tools, and at many companies they’re despised not because the tools are bad, but because users aren’t given the right support or the information needed to use them properly.[2] As a result, ratings are applied inconsistently across the organization, and the outcome is predictable: managers don’t like giving them and employees don’t like getting them.

Getting the best of both worlds

Redefine ratings

For convenience, the same rating scale is often applied to every area of an employee’s evaluation, from goals to competencies. More often than not, this is a 5-point scale (5 – Outstanding, 4 – Exceeds Expectations, 3 – Meets Expectations, 2 – Needs Improvement, 1 – Unacceptable). The problem is that some evaluation criteria simply can’t be rated on this scale, and it isn’t fair to expect managers and employees to try.

Goals, for instance, cannot be “Outstanding” or “Needs Improvement.” They are more appropriately “Achieved,” “In Progress,” “Deferred,” or “Cancelled.” The same is true with development items or accomplishments. We suggest re-defining rating scales to make them specific to the criteria being rated.

Let’s ditch the term “rating” altogether and replace the 5-point scale with a text-based “status” scale, so employees are evaluated on actions rather than on subjective views of quality. This allows tracking to continue while removing some of the negative stigma associated with ratings.

To take it a step further, users should see only the text-based status, while a numeric value assigned on the back-end allows for easy reporting.

Goal status scale

- Goal Achieved (3): All milestones and success measures have been achieved.

- Active Goal (1): The goal is still in progress; some milestones may have been achieved.

- Goal Not Met (0): The timeframe for the goal has passed; however, some or all milestones and success measures have not been met.

- Goal Deferred (-): For timing or business reasons, this goal has been deferred.
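To illustrate the idea of showing text labels to employees while keeping numbers on the back-end, here is a minimal Python sketch. The `GOAL_STATUS_SCORES` mapping and the `goal_score_summary` function are hypothetical names for illustration only; the sketch also assumes that a deferred goal (scored “-” above) is left out of reporting entirely rather than counted as zero.

```python
from statistics import mean

# Hypothetical back-end mapping mirroring the goal status scale above.
# Employees only ever see the text labels; the numbers are for reporting.
# "Goal Deferred" is scored "-", so it maps to None (excluded from averages).
GOAL_STATUS_SCORES = {
    "Goal Achieved": 3,
    "Active Goal": 1,
    "Goal Not Met": 0,
    "Goal Deferred": None,
}


def goal_score_summary(statuses):
    """Return the average back-end score for a list of text statuses.

    Deferred goals are skipped. Returns None if no goal has a numeric
    score (for example, when every goal was deferred).
    """
    scores = [GOAL_STATUS_SCORES[status] for status in statuses]
    scored = [score for score in scores if score is not None]
    return mean(scored) if scored else None


# Hypothetical team report: the statuses are what employees see,
# the averages are what HR sees.
team_goals = {
    "Employee A": ["Goal Achieved", "Goal Achieved", "Goal Deferred"],
    "Employee B": ["Active Goal", "Goal Not Met"],
}
for name, statuses in team_goals.items():
    print(name, goal_score_summary(statuses))
```

Whether deferred goals should be excluded or counted as zero is a policy choice each organization would need to make; this sketch simply excludes them so that business-driven deferrals don’t drag down an employee’s numbers.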

Development item status scale

- 100% complete

- 75% to 99% complete

- 50% to 74% complete

- Less than 50% complete

- Not started

Observation frequency scale

For areas of an evaluation that have to do with soft skills and require a little more subjectivity, we suggest using a behavior-based scale where employees are evaluated on the frequency of the behaviors being observed.

- Consistently Observed: This competency/skill is observed on a constant basis; everyone in contact with this person would observe excellence in this area.

- Observed: This competency/skill is observed; please continue to focus on it so that it is observed constantly, without exception.

- Observed Sometimes: This competency/skill is observed infrequently; there is a clear development opportunity here.

- Seldom Observed: This competency/skill needs immediate improvement.

Ditch the stigma of ‘meets’

Everyone wants to be unique and excel. This is why employees get their feathers ruffled when they are placed in a “Meets Expectations” category. I remember my grade-school report card saying “Satisfactory” and thinking, “Hmm. That doesn’t sound good.” The same goes for “meets.” We suggest using an observed or achieved scale so that employees can feel happy and proud of their performance in areas marked “Observed” or “Achieved.”

Hide numbers

We suggest displaying only text ratings rather than numbers, or text and numbers together. This way, employees are not reduced to a number but are placed in a relative category of performance, and everyone involved can focus on behaviors and observations instead of getting hung up on a number. If you are in the process of choosing a new performance management system, check whether it can accommodate text-based ratings backed by a defined numeric scale for reporting.

Ghost ratings

Something we are seeing more and more of is the “ghost rating,” where a manager is asked to provide an overall rating for an employee that the employee does not see. This allows organizations to report on performance and potential while keeping the review open and conversation-based for the employee.

Remove the shock factor

A little feedback goes a long way. The Eli Lilly research reflected a process in which performance management happened once a year. There is no doubt that employees experience a level of shock when feedback and ratings are saved up for 12 months and then thrown at them.

It is essential that organizations remove this surprise by re-tooling traditional once-a-year reviews with continuous feedback and status updates. Quarterly meetings, performance logs, ongoing coaching and feedback are ways not only to engage managers and employees in performance-boosting dialogue throughout the year, but also to remove the air of mystery surrounding appraisals. Both parties enter the final review knowing more or less what to expect, and the conversation can look ahead instead of scrambling to reach agreement about the past.

Warning: This might sound easy; just get managers to give more feedback, no problem. The reality is that ongoing feedback is probably the most important part of an effective performance management strategy and yet it is the most difficult to enforce and monitor. Managers must be trained to give effective feedback, and a culture of feedback has to be created, led by those at the top of the organization.

Focus on the future

Research has found that reviews focusing on the future, rather than recapping the past, seem to be the most effective. And it makes sense! If employees receive frequent status updates throughout the year and the end-of-year review is treated as a formal regroup and development discussion, then employees and managers will get more value out of it, and it won’t be perceived as just another gut-wrenching report card meeting.

Overall, it is possible for an organization to dampen the negative impact of ratings while still enjoying their benefits. At the end of the day, though, your organization’s culture and needs should shape how evaluation criteria are defined and measured.