Is artificial intelligence really HR’s friend?

Can it really speed up all those monotonous HR jobs you hate to do – you know, the ones that pull you down and stop you from doing the strategic stuff?

Better still, can it solve some of the biggest problems certain tasks still present – such as perpetuating bias?

In this series we’re going to start looking at different AI tools to try to answer exactly these questions.

Every few weeks, we’re going to look at and independently review a piece of HR AI.

First, our methodology:

The process works like this:

- We book a walk-through of a specific tool with an AI provider.

- We get a demonstration of its capabilities.

- If we want to probe them on something – asking the sorts of questions we think HR professionals want answered – we do, and they have their opportunity to respond.

- The verdict below is completely our personal assessment. It’s based entirely on what we see and whether we think our questions have been satisfactorily answered. The vendor has had no input.

So who’s up first?

Today we’re looking at: Datapeople

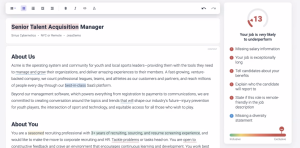

What is its big USP?: Datapeople is a job ad description tool that claims to be able to help recruiters/HR professionals write job ads that appeal to the broadest range of people, and match those ads to people with the right qualifications. Customers using it claim to have attracted twice as many qualified candidates and 79% more qualified female candidates, and to have filled their roles 18 days faster. The tool assesses the language of job ads pasted into it and, in doing so, claims to be able to identify areas where bias may be putting some candidates off. As such, it argues that ads that go through its tool come out the other end far more inclusive. It also says brand consistency from ad to ad (and from recruiter to recruiter) can be more carefully controlled.

Who took us through the tool: Jessica Hoffman, Datapeople’s head of marketing

The context: Jess says: “Recruiters/hiring managers are typically required to hire episodically, and so they often don’t have the expertise to create job ads that have consistent brand messaging and will attract the widest pool of people. Our tool gives them the intelligence they need to craft better ads – almost instantly – that puts them more in control of the hiring process. Job posts can often make or break hiring, and better results can be achieved with better job descriptions.”

What we saw:

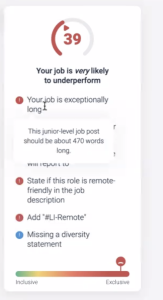

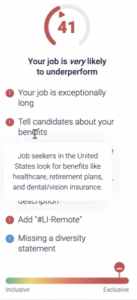

The tool works by looking at some existing copy and giving it a ‘score’ that indicates whether it is likely to perform or underperform.

It flags, for instance, elements that are missing (such as salary information), or indicates that the ad is too long or doesn’t explain basic candidate expectations – such as who they’ll report to.

There is also an ‘inclusive’ or ‘exclusive’ bar underneath this. The AI scans the copy it can see to determine how inclusive the ad’s language currently is.

The goal of the AI tool is to highlight areas the recruiter can quickly change that will bring the overall score up.

Jess says the target to reach is 85.

The example we’re shown starts at a lowly 13 – which is not unlike many ads, she says.

The scoring system is claimed to be based on the software having tracked more than 65 million job posts for language and job ad attributes that it says will help/hinder capturing a diverse candidate pool.

So…let’s start.

Our job is to raise the score, and the first thing the AI highlights is the job title itself (see pic), which reads ‘Senior Talent Acquisition Manager’.

The AI has a problem with this, says Jess, because it has looked at the requirements listed further down and checked whether the educational requirements calibrate – or ‘fit’ – with the job ad title.

It’s decided that the title of the job seems more senior than it really is (or what qualifications are being asked for). Says Jess: “Job titles have an outsized impact on response and engagement with candidates, and here the title may put people off if they don’t see themselves as being as senior as the job suggests they should be. The title has to make sense to the person being targeted.”

Simply downplaying the seniority to its actual level is enough to move the meter from 13 to 29.

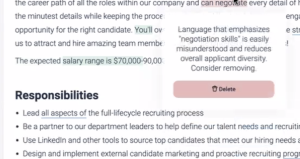

Using more commonly accepted parlance (i.e. dropping the clumsier word ‘acquisition’ and instead saying something more familiar to readers, such as ‘recruiting manager’) moves the score even further in the desired direction – to 39.

The AI has also detected this is a New York-based job, and so it knows there is a new law requiring salary details to be included.

When this is added (see below), the score doesn’t change though. Jess says this is because it’s not blanket best practice, but just specific to this geographical area.

The next red flag to deal with is the length of the ad. Jess says lengthier ads are known to attract unqualified people who simply don’t read it all, while qualified candidates – the people companies want – will be deterred by how much wading through they need to do. It suggests the ad is around 470 words too long!

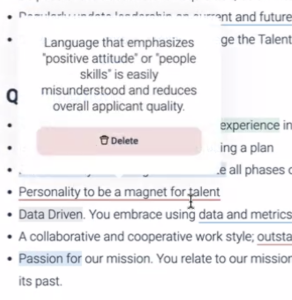

It also flags up potentially bias-loaded words that should be changed – such as ‘seasoned’ (which could over-emphasize lots of experience, even for this non-senior role). It also suggests that phrases such as the candidate needing a ‘positive attitude’ or ‘people skills’ could be misconstrued and potentially reduce applicant quality.

Soft skills are also called out as potentially being a barrier – such as ‘outstanding interpersonal skills’, ‘communication capabilities’ and ‘passion for’.

Also highlighted is a phrase saying the desired candidate should have a personality that is a ‘magnet for talent’ [see later for more on this].

After deleting/changing these statements, the score improves – not by much – to 41.

We’re still some way off getting to 85 – but we’re going in the right direction.

The next red flag is not mentioning benefits. We add these in, and the perform-o-meter nudges up to 49.

At this point we’re starting to run out of time, but there are other highlighted items that can be changed, and these, promises Jess, will continue to move the score higher towards the magic 85 level.

Conclusions:

For those who have never seen AI at work, this simple, step-by-step process of how to make a job ad read better is intriguing.

As a journalist – who understands the power of poorly crafted words – I ‘get’ that cutting length, being more succinct and avoiding disingenuous words is important.

I also like the smart checking of content – for instance, against location, and even against the job description – and the tool’s ability to decide when things don’t quite match.

I can imagine how the original recruiter has initially tried to make this job sound like the most amazing, and wonderful, and responsibility-laden role ever – probably to make it seem grander than it is. It’s a trap many fall into, because over-embellishments like these kill it. Here the AI is clearly not going to be fooled. Well done, I say, for calling out the recruiter’s liberal play with the truth, and getting him/her to pull back and concentrate on what the job really is.

Alerting recruiters to things that have been missed out (another feature raised was the need for a diversity statement, plus specific benefits that matter more to people than those currently included) is important too. These can be easily forgotten, and leaving them out can significantly lower the response an ad gets.

Alerting recruiters to biases is, again, something to be applauded.

I can see how correcting all the points raised can make an ad better. It’s effectively doing what I would call ‘editing’ – but it’s something recruiters may not be used to doing. They may also not be cognizant of the way language can change someone’s instinctive response to something (i.e. making them feel excluded).

There’s also no doubt that following these rules helps bring about a sort of consistency to job ads no matter who has written them across the company.

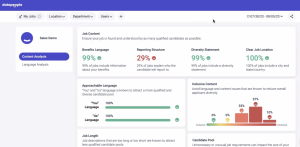

Jess showed me a dashboard view the tool creates of all live job ads (see above), where the user can see whether they are using approachable and inclusive language, whether the content is consistent, and so on, all the way across the board. This will improve consistency of communications, and make an ad’s success less dependent on who has written it.

Any reservations?

Like any AI, you sort of have to put your faith in the algorithm – that is, you do kind-of need to accept (without question) that the changes you are making to improve the scores do indeed make the ad more appealing – and, most importantly, that it becomes more attractive to a diverse and correctly qualified pool.

This does require a leap of faith that some might find harder to make than others.

I had a particular problem with the AI wanting to strip out what I might call the more ‘journalistic’ style of the original copy.

The recruiter who first wrote this clearly wanted to inject a bit of flair/individuality when he/she described their perfect candidate as a ‘magnet for talent’.

Call me old-fashioned, but I thought that was quite a nice turn of phrase, and if there’s one thing I think job ads could have more of, it’s fun and individuality that give a sense of the culture of that company. I’d rather see this any day than a dry, drab-reading ad that might well be correct, but lacks a little…shall we say, ‘sparkle’.

I’ve always thought the best ads are also those that stick in the mind. Sure, get rid of biased language, but getting rid of language that creates a good impression, has some stand-out feature, and is more playful and less mechanical? I’m not so sure.

I asked Jess about this. This was her response: “We’re sometimes asked whether we’re destroying the creative writing process. [But] a job post isn’t always the correct place for creative writing. It’s a document to get good people in and less good people out. What sounds differentiating to one person may not to another.”

I’m not entirely convinced a job ad is merely a working document, devoid of any color. If you can’t have some fun in job ads, then I’m not sure what the point of them is. They do need to demonstrate the ‘vibe’ of an organization (in my view).

Jess added that the aim of the AI isn’t to skew the copy to one demographic over another, but to make it fair, and to level the playing field.

Again, I’m not sure whether this is totally the best direction to go in. Aren’t jobs supposed to attract/target specific groups of people?

To its credit, Datapeople says it wants all qualified candidates who see an ad not to be put off by it, and to think they have a fair crack of the whip, so maybe I’m wrong about this last point.

I also had a concern that in chasing the 85 score, it’s this task that becomes the goal, not creating the best ad. But Jess was keen to re-emphasize this tool’s aim of being a recruitment-leveler.

Finally…Could some businesses find it impossible to hit 85 – for instance by not having the specific benefits that really raise the score?

Jess says there’s “no reason” why most job ads can’t meet this threshold. “Companies don’t need to be perfect; there’s plenty of wiggle-room in the scoring,” she says.

In summary:

So there you have it: a job ad assessment tool that promises to massively improve the written content of ads, and make them more appealing to more qualified people.

On balance, I think this is achieved, and it reminds recruiters of all the rules they need to consider when writing an ad.

Jess argues job ads are “technical documents powered by hiring outcomes,” and that everything else is secondary.

But does striving for this, at the expense of injecting some creativity, really make for a ‘better’ job ad?

Maybe it does.

I’m still a little dubious on that front, but one certainly can’t fault the noble aim of wanting ads to reach people who might typically feel excluded or discouraged from applying by badly written ads – even if they are perfectly qualified. How many times does that happen? This tool certainly wants to help recruiters stop that.