Last week’s shenanigans surrounding OpenAI’s controversial CEO Sam Altman (sacked, moved to Microsoft, then triumphantly returned – all within 72 hours) briefly threatened to spoil the party.

But the party is back on – because ChatGPT, the poster child for artificial intelligence, is officially one year old today.

To say it’s been a helter-skelter year would be something of an understatement.

Since ChatGPT hit an unsuspecting public on 30th November 2022, its uptake has been nothing short of phenomenal.

What started as a tool to speed up bedroom essay writing and create short text prompts has evolved into a technology used by 92% of Fortune 500 companies.

At OpenAI’s first developer conference just a few weeks ago came the announcement of the imminent arrival of GPT-4 Turbo (a super-charged version of GPT-4, its latest language-writing model), as well as plans for the next iteration, GPT-5, and ways users can customize their own versions of it.

With all these moves intended to open up new ways of monetizing the predominantly free-to-access business, and to return some of the $10 billion Microsoft has already invested in it, it’s safe to say that this is a business that, having got its first twelve months under its belt, is setting itself up for a very long future.

What it means for HR

But for HR professionals, this has been a year where it’s been difficult to gauge what to do about it.

To give just a taste of its phenomenal growth, you only have to look at some of the numbers it now boasts to understand just how scarily pervasive it has become – and so quickly too.

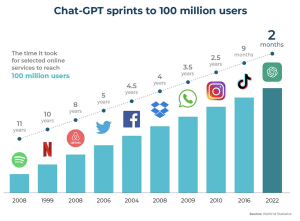

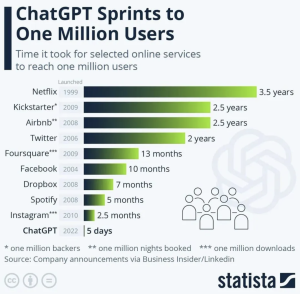

The technology took just five days to reach one million users, and it now claims to have more than 100 million active weekly users. Just compare this to Facebook’s growth rate: it took four and a half years to reach these sorts of numbers.

Monthly visits to the ChatGPT website now top 1.5 billion, with 31% of users being in the US.

HR fears about getting left behind

Stats like these have sent many organisations into a tailspin about if, when or how they should integrate ChatGPT as a way of boosting productivity or cutting headcount.

Fear of getting left behind is a major concern – especially with recent data from Statista finding that around a quarter of early adopter US companies it questioned had already saved $50,000 to $70,000 by using ChatGPT.

These early users have great confidence in what they’re finding – not least because other research suggests around 20% of people think text presented to them by ChatGPT was actually written by a human.

And then, of course, there have been some high-profile announcements: last month, ‘Big 4’ consultancy PwC revealed it had teamed up with OpenAI to offer clients advice generated by AI.

One big question for HR professionals remains though – and it’s not just whether the integration of ChatGPT will transform work and reduce headcount. It also concerns whether it requires a whole new change of direction for employee training and development – on how employees will in future need to work in collaboration with ChatGPT.

From a productivity point of view, research already suggests that 36% of employees say they find ChatGPT useful for writing content, while 33% say it is useful for analyzing data and information; 30% use it for customer support and 27% for brainstorming new ideas. Microsoft will itself use the latest version of the product, GPT-4, to automate Excel, PowerPoint, Outlook and Word.

Significantly though, as well as staff needing training on how to use ChatGPT, commentators suggest there will also be a re-training requirement around how work itself and people’s jobs could be impacted by this technology. And this is a much bigger issue to chew over.

Research by learning management platform provider Epignosis suggests 35% of US employees say their work responsibilities have already changed due to AI tools, and that 49% say they need training on using AI tools like ChatGPT in order to do their job. But it reports that only 14% of staff have actually had ChatGPT training so far.

The choice is yours

So where should CHROs be putting their focus?

HRDs – as well as employees themselves – have as much right to be fearful of ChatGPT as they do to be helped by it.

Complicating matters is the fact that the young people employers want to hire are already using it – but not necessarily in the way recruiters may like.

According to recent research by ResumeBuilder, 46% of applicants are actively using ChatGPT to write resumes and cover letters. An astonishing 78% of jobseekers secured an interview when using application materials written by the AI, and 59% were eventually hired. This has led some to speculate whether someone’s own language skills, or their ability to write or create reports, are being misrepresented by the very technology they’re using – making them look artificially better than they really are in recruiters’ eyes. How can HRDs assess someone’s true potential when they’re simply able to farm it out to ChatGPT to write a pithy piece of text?

Then again, does it really matter if staff can’t do something themselves, if they can get ChatGPT to do it for them?

TLNT recently spoke to Akash Nigam, founder of avatar-creating company Genies. He’s spending a small fortune each month on ChatGPT Plus accounts for all of his 170+ employees, and says it makes his people more productive, and his company more profitable.

“On the one hand, we just think it’s important that they learn about something that is disruptive,” he says, speaking exclusively to TLNT. “They use it for efficiency, but also to improve their skillsets.”

He adds: “It’s less about replacing work but using it to inform work – for instance using it to tell us what is trending, or to take people’s existing work and make it more creative.”

According to Nigam, people don’t just create work, and then ask ChatGPT to make it better. “It’s more providing nuggets, or using it as a form of inspiration,” he says.

And yes, this does mean barriers to entry for people into the workplace are lower – because, he argues, people can now just ask ChatGPT to do something they can’t (he says one of his employees is actually using ChatGPT to teach him how to code).

But is this a problem?

Maybe it doesn’t have to be. As Nigam says: “ChatGPT is incredible, but we also have to remember, that we also still need to have critical human thought. Both can live together.”

What next?

So, one year down the road, what’s really next for ChatGPT and for HR professionals?

It’s quite possible that ChatGPT can become a boon for HR as much as something it needs to be wary of.

For instance, ChatGPT can help identify underlying reasons for employee turnover by analyzing HR data, such as exit interviews, employee surveys, or feedback. It can screen candidates; it can provide personalized responses to employee questions, offer guidance on company policies and benefits, or enable better communication between HR and employees. In other words, it can deal with just the sorts of HR inquiries that typically bog HR professionals down, and enable them to spend more time on strategic activities.

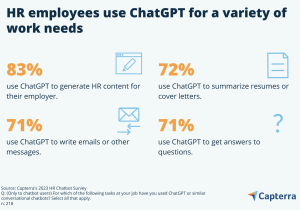

Around one in six (17%) HR professionals claim to have already used the chatbot app for certain HR tasks, while 20% have used it for “something else” at work (via a recent LinkedIn poll).

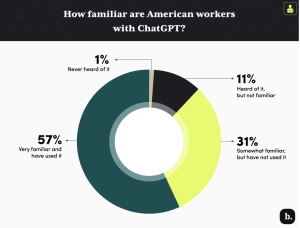

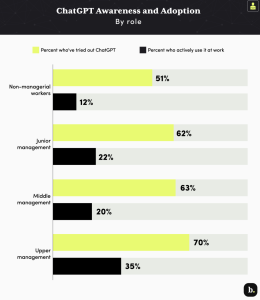

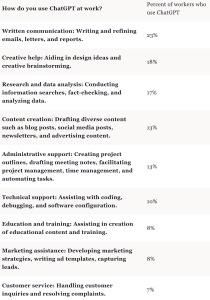

It’s also the case that 57% of American workers have tried ChatGPT, and 16% regularly use it at work, according to Business.com. Managers are particularly heavy users, and the list of tasks it’s being used for is growing all the time (see below).

But not only must HR be decisive to avoid being left behind, there are risks it needs to weigh up too – not least bias and the chance of it providing incorrect information.

It also needs to put policies in place about just how ChatGPT is going to be used.

The simple fact is, ChatGPT isn’t intelligent. It has no ideas of its own; everything it produces is based on existing ideas. As such, there is a real danger ChatGPT could present HR and employees alike with inaccurate data – which could be reputationally damaging, hugely costly, or both.

By OpenAI’s own admission, ChatGPT “sometimes writes plausible-sounding but incorrect or nonsensical answers.”

Research by Capterra finds only 29% of HR workers who have used ChatGPT or other generative AI chatbots for work always ask the chatbot to cite sources for the content it provides.

This could be dangerous.

In one very simple experiment earlier this year, Capterra researchers asked ChatGPT what the minimum wage was in California.

It ‘replied’ saying that, as of 2021, the minimum wage is $13 an hour for employers with 25 or fewer employees, and $14 an hour for employers with 26 or more employees.

It was only when researchers asked for a source that it returned the website address for California’s Department of Industrial Relations. This did have the correct and up-to-date minimum wage information – but it was different from ChatGPT’s initial, incorrect response.

As Capterra noted: “ChatGPT can’t provide up-to-date information after 2021, such as the fact that California’s minimum wage is actually $15.50 for all employers as of 2023.”

As with any one-year-old business, a lot of growing up is still required before users can have complete faith in it.

Perceptions of what ChatGPT is able to do may differ, but what certainly isn’t up for debate is that this technology isn’t going away any time soon.